THE BIOCYBERNETIC FOREST

Max de Esteban, AI, and the Iconology of Thinking

W.J.T. MITCHELL

W. J. T. Mitchell is the Gaylord Donnelley Distinguished Service Professor of English and Art History at the University of Chicago. His research focuses on the history and theories of media, visual art, and literature from antiquity to the present, with particular attention to the relations between visual and verbal representations in culture and to iconology. He is also the editor of the interdisciplinary journal Critical Inquiry. Mitchell is a member of the American Academy of Arts and Sciences and has received fellowships from the Guggenheim Foundation, the National Endowment for the Humanities, and the American Philosophical Society. His book Picture Theory (1994) received the Gordon J. Laing Prize from the University of Chicago Press. His book What Do Pictures Want? (2005) won the James Russell Lowell Prize in 2005.

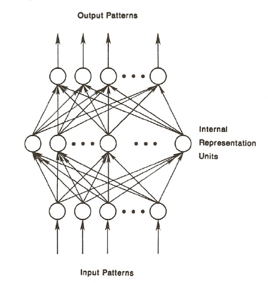

The artist Max de Esteban “asks” a machine to take a self-portrait. Not just any machine, but a computer. And not just any computer, but one capable of parallel processing with other computers, guided by an algorithm that uses “back propagation of errors” to produce “Deep Neural Networks.”[1] These networks insert intermediate layers of connections between the inputs and the outputs. The algorithm compares the input to the output to detect any discrepancies, or errors, and then “weights” the intermediate layers to correct those errors and make the output a better match for the input. This process is especially important in computer vision, where, for instance, the computer’s camera might take a picture of me and then send a signal to print it out with my name attached. But suppose the light has changed since the initial photo was taken, so that when the algorithm checks input against output, they no longer match. A supervised learning process runs the new input to correct the errors, and soon the computer can recognize my face faster than any human.
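To make the mechanism a little more concrete, here is a minimal Python sketch under stated assumptions. It is not de Esteban’s system, nor the code of the 1986 article cited in note [1]. Because the paragraph above describes the algorithm comparing output against input, the sketch uses an “autoencoder,” a network whose training target is its own input (in the more common supervised setting, such as face recognition, the output is instead compared with a separate label, such as a name). The network has one intermediate layer of two units; the function name `train_autoencoder`, the layer sizes, the learning rate, and the number of epochs are all hypothetical choices made for illustration.

```python
# Toy sketch (hypothetical names and parameters): a 4-2-4 autoencoder
# trained by back-propagation of errors, using only the standard library.
import math
import random


def sigmoid(x: float) -> float:
    """Logistic activation used by every unit."""
    return 1.0 / (1.0 + math.exp(-x))


def train_autoencoder(patterns, n_hidden=2, lr=0.5, epochs=2000, seed=0):
    """Hypothetical toy autoencoder: the target output is the input itself.

    Returns the summed squared error recorded for each training epoch.
    """
    rng = random.Random(seed)
    n_in = len(patterns[0])
    # Connection weights: input -> intermediate layer (w1), intermediate -> output (w2).
    w1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    w2 = [[rng.uniform(-1, 1) for _ in range(n_hidden)] for _ in range(n_in)]
    b2 = [0.0] * n_in
    history = []
    for _ in range(epochs):
        total = 0.0
        for x in patterns:
            # Forward pass through the intermediate ("hidden") layer to the output.
            h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(w1, b1)]
            y = [sigmoid(sum(w * hj for w, hj in zip(row, h)) + b) for row, b in zip(w2, b2)]
            # Error: the discrepancy between output and input.
            err = [yk - xk for yk, xk in zip(y, x)]
            total += 0.5 * sum(e * e for e in err)
            # Backward pass: error signals at the output, then propagated back
            # to the intermediate layer (using the weights before updating them).
            d_out = [e * yk * (1 - yk) for e, yk in zip(err, y)]
            d_hid = [
                hj * (1 - hj) * sum(d_out[k] * w2[k][j] for k in range(n_in))
                for j, hj in enumerate(h)
            ]
            # Re-"weight" the connections by a small gradient-descent step.
            for k in range(n_in):
                for j in range(n_hidden):
                    w2[k][j] -= lr * d_out[k] * h[j]
                b2[k] -= lr * d_out[k]
            for j in range(n_hidden):
                for i in range(n_in):
                    w1[j][i] -= lr * d_hid[j] * x[i]
                b1[j] -= lr * d_hid[j]
        history.append(total)
    return history


# Four one-hot input patterns that must squeeze through two intermediate units.
patterns = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
losses = train_autoencoder(patterns)
print(f"error in first epoch: {losses[0]:.3f}; in last epoch: {losses[-1]:.3f}")
```

The error printed for the last epoch should be lower than for the first, which is all the sketch claims: repeated comparison of output against input, with the error pushed back through the intermediate layer, gradually brings the two into closer agreement. The error signals for the intermediate layer are computed before the output weights change, as back-propagation requires.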

The problem with having the Deep Neural Network take its self-portrait, de Esteban claims, is “that we did not have a real one as a reference.” The DNN, like the computer, is faceless, a black box.[2] It is the old Baudrillardian dilemma: no original “self” to check against the “selfie,” nothing to provide the input to be checked against the output, and thus no way to engage in supervised learning or back propagation of errors. There is no “original” to provide a standard for detecting errors in the copied output.

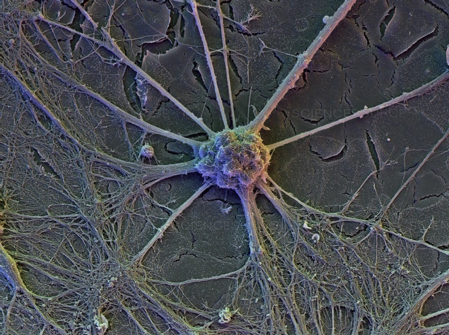

Nevertheless, the Deep Neural Network produces images in response to the command to show itself. It knows enough to do that. And the images turn out to have an uncanny resemblance to images of a radically different kind. I sent copies of de Esteban’s DNN selfies to my brilliant young niece, Christine Ryan, who describes herself as a “humble cellular neuroscientist.” She reports back that “the most interesting part of all this is how it relates to the actual brain,” i.e., the human brain. “The so-called ‘units’ in these neural networks are supposed to model actual biological neurons, which are much more complex than the input-output machines described by these types of computational models.”[3]

But “much more complex” is a question of degree, not kind. And so the uncanny moment arrives when one compares an image of brain tissue (which most certainly does have a real physical original in the world) to the selfie produced by de Esteban’s Deep Neural Network. They are similar, or perhaps “not dissimilar.” They resemble one another; they are “iconic,” as the philosopher Charles Sanders Peirce would say, sharing a firstness, or quality of similarity. What is that quality? It is not the tree diagrams or lattices of schematic input-output diagrams that engineers provide to illustrate the back-propagating error networks of Deep Neural Networks. What they resemble turns out to be a third thing, an utterly familiar phenomenon of the natural world: a forest.

So now we have three things in front of us: a section of the human brain with its neurons and dendrites; a selfie of a smart computer that can learn; and a dense ecosystem filled with diverse plants, parasites, insects, birds, reptiles, and mammals. Ranked on a scale of complexity and diversity, the forest is surely the most complex entity, with the human brain somewhere between it and the smart computer. The “Deep Learning” of which computational neuroscience is capable is more like that of an insect than that of a human brain.[4] But that is no insult to AI at a time when computers can simulate not only the behavior of a single insect but that of an entire swarm, with powerful potential for drone warfare.[5]

Icons are what Peirce calls “qualisigns,” signs that signify by virtue of their resemblance to what they stand for. So what is the quality that links the smart computer to the brain cell to the forest? To ask the question is immediately to glimpse the answer: it is the form that Gilles Deleuze and Félix Guattari called the rhizome, “an image of thought,” a non-hierarchical model for thinking that they contrast with the “arborescent,” or hierarchical, tree-like form familiar to us from logic.[6]

Deleuze and Guattari’s rhizome has now been literalized and actualized, not at the level of human thought, but in an anthropological reflection on forests. The anthropologist Eduardo Kohn asks the question, Can forests think?, and answers emphatically in the affirmative.[7] Kohn argues that thinking is not exclusively human but extends to all living things. And he takes the ecosystem itself to be a totality, a system of relationships that has a life of its own. Do forests, then, have the temporal durability of an organic species, genus, “kingdom,” or “clade” (the evolutionary unit of contemporary biological classification)? Are forests a spatial, collective species or organism, something like communities or cities? Are these “mere metaphors,” and dangerously close to the kind of thinking that has grounded ideas of racial consciousness? Or are they incorrigible “absolute metaphors,” as Hans Blumenberg suggests, tropes and images that we cannot escape? How far could we take this? Do planets think? Is Bruno Latour on to something when he compares the surface ecology of the earth to the ancient goddess named Gaia, giving a human face to the environment?

Curiously, given the promiscuity of his extension of thinking to non-humans, Kohn rules out machines as thinking things.[8] This seems like a mistake. “Natural” forms of intelligence in plants and animals are usually described in terms of Darwinian adaptation. “Smart” organisms respond to the environment in ways that increase chances for survival of their offspring. Collective intelligence, as Catherine Malabou argues, “is the wisdom of the community in adapting to larger-scale changes.”

In humans, intelligence has always been artificial. When was it not a matter of keen, careful observation, listening, and reflecting, followed by a judgment about what to say or do? Kohn argues that the forest is riddled with what Peirce calls “scientific intelligences,” capable of responding to stimuli in a positive way, as if they “knew what to do.” They do not inhabit a symbolic universe, but a world of icons and indices: images and pointers. They respond to stimuli and display their responses. Computers do this, but they also (along with higher mammals) participate in our human, symbolic universe. They are McLuhanite “extensions” of our brains and bodies and voices. If forests can think, surely machines can too. A forest may not play chess, but it does have methods for repairing itself after a fire.[9] “Controlled burns” are the way humans allow the smartness of a forest to flourish.

Heidegger thought that thought was confined to human beings. But he also thought that we “are still not thinking.” We have not yet learned how to think, or perhaps even what thinking is. Philosophizing is not thinking. Science is not thinking. The most thought-provoking thought, for Heidegger, “in our thought-provoking time is that we are still not thinking.” (Basic Writings, 371) Of course one wants to ask who this “we” is and what evidence there is that they are not thinking. And if “our…time” has yet to achieve real thinking, when and how will we start thinking?

Or would it be better to say that we have already started thinking without noticing it, or understanding how we do it, like a child learning to walk and talk without ever quite understanding how it is done? Heidegger thinks thinking is mainly a matter of memory. But as we know from computer science, memory isn’t everything. There is also the question of speed. How quick is thought? How fast (and how numerous) are the processors? Don’t human brains teach the machines the arts of parallel processing, and the skills of retrieval? Heidegger leaves imagination out of the equation, which seems like a mistake. Thinking is not just afterthought, or retrieval plus a big memory capacity. Borges’s figure of absolute and total memory, “Funes the Memorious,” is an immobilized, brain-damaged autistic who remembers (that is, thinks) the entire universe. Thinking is also forethought, anticipation, and correction of the past by “back-propagation of errors.”

So perhaps Heidegger was doubly wrong about thinking. Not only is thought much more than memory; it is not threatened by technology and science, but aided by it. What he was right about was that we “are still not thinking,” in the sense that we have still not learned what thinking can be and do, and what effect our thoughtlessness about thought is having. Thinking is an incomplete project. We now have powerful machines for thinking, but we (and I mean by this we humans, our species on this planet) have not yet learned to think in a way that would ensure our survival. This is where ideology comes into the equation, aided by iconology, the science of images.

Max de Esteban accompanies his rhizomatic “selfies” of Deep Neural Networks with a 23-minute video that takes us on a slow, ruminative stroll through a foggy forest. The stroll feels disembodied, as if the spectator were floating on air rather than walking, perhaps an effect produced by a Steadicam. The stroll seems aimless, occasionally turning toward what looks like a path, sometimes turning to the side to pan over deep, tangled underbrush. A low male voice-over meditates on the contemporary acceleration of artificial intelligence, sometimes expressing mild alarm over its implications for privacy and democracy, while assuring us that technological advances are irresistible and inevitable.[10] The voice reminds us that the cotton gin was invented in 1793 and that, as a result, between 1800 and 1850 the number of slaves in the American South multiplied 600 times. Should the cotton gin not have been invented? There was no choice, the voice assures us. Representative democracy was invented over 200 years ago, when most people could not read or write. Is it obsolete today? What will replace it in an age of real-time polling to find out the desires of a population, and of algorithms that calculate the vulnerabilities of great masses of people to disinformation, propaganda, and outright lies? How will we survive in a time when we have outsourced our reasoning to algorithms that no one understands, housed in black boxes that spit out “results” that are unverifiable by human intelligence?

De Esteban’s voice-over is calm, sometimes ironic, sometimes ominous. Its most emphatic expression is a muted “huh!” at some interesting factoid. There is no trace of panic, hysteria, or exaggerated technophobia. As the camera floats deeper into the forest, it approaches dimly perceived edges and precipices; as the film comes to an end, the fog grows thicker and the light gradually fades to black. Are we “lost in thought,” wandering in a forest? Heidegger, echoing Plato’s allegory of emergence from a dark cave into the light of day, postulates a “clearing” or “glade” (Lichtung) in the forest.[11] But what if human thought is itself a forest? What if all our efforts to clear away these forests only generate new forests? Could this be why our cities are so often compared to jungles, and why the deep neural networks of computers produce rhizomatic selfies?

These questions seem especially pertinent when one of the greatest dangers to the global environment is the destruction of forests all over the planet. As I write this, the ancient rainforests of the Amazon are being burned and cleared for industrial agribusiness. Perhaps these great rhizomatic ecosystems, which function as the lungs of the planet, are also the best model for thinking itself. The proper model for learning to think, then, would not be clearing but reforestation, a practice that shows signs of taking hold in post-industrial cities like Detroit, where the abundance of vacant lots provides an opportunity to re-imagine the whole relation of city and countryside.

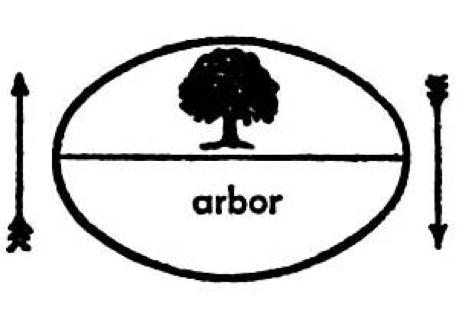

This sort of re-imagining of the forest might help us re-think the very medium in which we do most of our thinking, namely, language. Saussure famously depicted the fundamental unit of language in a diagram that couples the word for tree (the signifier, or acoustic image) with an image of a tree (the signified, mental image, or concept) inside an oval, with a bar between the word and the image, flanked by arrows that indicate the transfer of meaning between word and thought.

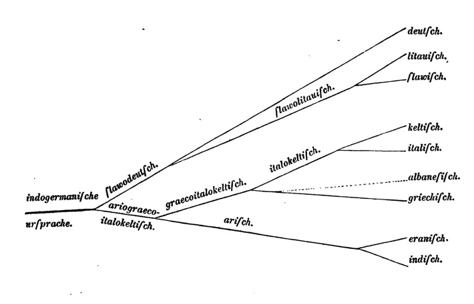

In this light, what else are thinking and speaking but a forest? Kohn’s question, Can forests think?, is inverted: can thinking find a pathway to reforestation? Heidegger’s model of clearing recedes, along with the most notorious relic of race-based linguistics, the “family tree of languages.” As my colleague Haun Saussy puts it:

This graphic representation of the relations among languages was pioneered by August Schleicher, who just happens to be the theorist Saussure least respects. Schleicher thought that languages were living beings and were born, evolved, died out in a Darwinian way. The strongest (and by that you can be sure he means the Indo-European idioms) survive. Moreover a language is the expression of the consciousness of a race. Saussure hated this whole organicist way of thinking and recurs again and again to examples that show how a people can adopt a different language in the course of its history, how the meanings of languages derive not from thinking but from material infrastructures like pronunciation and writing systems, and how fragile the concept of race is.[12]

The tree is a part object, a minimal unit, and absolutely the wrong model for the totality, which includes many more things besides trees, just as language contains many more things besides words, and thinking many more things besides thoughts.

We find ourselves wandering today in a new forest that we ourselves have planted and nourished, namely the world of artificial intelligence that now surrounds us like a second nature, based in new infrastructures and writing systems. Since we are ourselves evolutionary descendants of arborescent creatures, the primates, this seems somehow a fitting place for humans to wind up. Of course we could try to burn it down with technophobic polemics that deny the intelligence of non-humans, or that demonize it as the sinister enemy of traditional human values. This would be stupid, and would seriously undercut the claim that human beings are possessors of “natural” intelligence. In fact, human intelligence may, like Heidegger’s “thinking,” be something our species has yet to accomplish. A reasonable starting place would be to interact with our thinking machines as a new environment, a habitat that challenges us to re-think what we are or can become.

De Esteban’s “A Forest,” then, is really two forests. One is located in the infrastructures of the human mind-brain (memory, understanding, will, self-consciousness), re-described in the language and imagery of neurological science (neurons, etc.) and computational science (input/output). The human subject is thus explained as a more or less smart robot, and the machine is increasingly described as a subject: a creature of habits and broken habits, of problem-solving and stupid failure. The other is the web and the algorithms of Artificial Intelligence, speaking the language of neuroscience. All intelligence, it seems, is “artificial,” an artifact of interactions of a system with its environment (to use Niklas Luhmann’s terms).[13] It always has been, from the ancient memory systems of rhetoric to the invention of writing, numbers, drawing, and speaking. The combination of the symbolic with technological evolution (methods, tools, and machines) becomes the infrastructure of thought: a forest of chips, links, and algorithms on the one hand, a rhizomatic structure of neurons and connectors on the other. Both the organic and the machine brain, then, are not really “selves” or subjects. They would be better described as “organs” (parts of organisms) or as “environments,” spaces where organisms live. The brain is not a “self” any more than a forest or a tree is.

The merger of AI and neuroscience is framed, as de Esteban notes, within “ideology,” which can only mean, in this context, capitalism. Here I think of Catherine Malabou’s mordant reflections on a certain “madness” in brain architecture. The brain, it turns out, doesn’t really have any “intelligent design”:

In fact, in its anatomical structure, the brain conserves organization that attest to erratic evolutionary past rather than an ‘optimal conception’ in terms of its functioning. . . . The arbitrary circumstances the organism was confronted with during its evolution are maintained along with a sort of organizational and functional ‘madness’ in brain architecture. The ‘madness’ is inscribed in our neurons along with our capacity to reason![14]

What about Machine Intelligence? Here I go back to basics: the first Blue Box self-conscious computer in cinema history was the HAL 9000, who becomes so paranoid that he goes on a murderous rampage in the service of an algorithm that valued “the mission” above the humans who were supposed to carry it out. HAL is the most human character in 2001: A Space Odyssey. The human protagonist, played by Keir Dullea, is, as the actor’s name suggests, very low in affect, while HAL, who lives as voice only, is warm, concerned, slightly feminine, and over-emotional. Among his last words as he is unplugged are “I’m afraid, Dave.” The more complex and self-aware our machines become, and the more closely they simulate human intelligence, the more vulnerable they become to stupidity and madness. Right now, however, human beings are way ahead on both those occupational hazards of intelligence. As a species, we have become “a danger to ourselves and others,” the legal criterion for involuntary confinement on grounds of insanity. So perhaps we should look at AI as a co-evolutionary entity, one that we must put to work on the biggest, most vital “forest” we inhabit: the planet. We will not be able to save our environment, ecological or social, without the help of our companions, the computers. Could this also be true for politics, where the rise of social media, trolls, bots, and disinformation is undermining representative democracy? Malabou raises the question: what kind of “experimental democracy” could AI help us design? The American experiment evolved from a democracy founded in Enlightenment notions of reason and a balance of distinct powers that mirror a human intelligence constituted by self-regulation, judgment, and will (i.e., the legislative, judicial, and executive branches of government). Is there an algorithm that can handle these forms of parallel processing? Or is algorithmic thinking itself best suited to circulating disinformation, paranoia, and mass hysteria among targeted populations and individuals, as in the notorious work of Cambridge Analytica in the service of the political agendas of Brexit and Donald Trump?[15]

It would be comforting to blame the current global tendency toward failing democracies and authoritarian dictators on Artificial Intelligence. But the fault, dear Brutus, is neither in our stars nor in our forest of neural networks, but in ourselves. If insanity is one structural feature of intelligence in both brains and machines, the other side is stupidity. As Malabou notes,

Stupidity is the deconstructive ferment that inhabits the heart of intelligence. . . . A single word, ‘intelligence,’ characterizes both genius—natural intelligence—and machines—artificial intelligence. A gift is like a motor: it works by itself and does not come of itself. In this sense, then, it is stupid.[16]

We have no choice, then, but to make both our machines and ourselves smarter. The alternative is extinction, which is the third component of Max de Esteban’s survey of contemporary infrastructures, along with finance capital and artificial intelligence. Our machines are keeping careful track of this. We are living in the greatest period of mass extinction since the demise of the dinosaurs, which perhaps explains why they have become the totem animal of modernity.[17] Approximately 300 species vanish every day, roughly one every five minutes. Given its notorious adaptability, the human species can probably survive the rising seas, expanding deserts, and poisoned air and water inside ark-like pods for the very rich. Max de Esteban contemplates this future without flinching, challenging us to think of something better.

[1] This algorithm is described by my colleague, the digital artist Jason Salavon, as the “secret sauce” in many forms of high-complexity processing. It was popularized in a classic article by David E. Rumelhart, Geoffrey E. Hinton, and Ronald J. Williams, “Learning Representations by Back-Propagating Errors,” Nature 323 (October 9, 1986): 533–36.

[2] This may be why science fiction movies so often present computers with faces, proper names, and even bodies. There seems to be a deep desire for the computer to “show its face” and provide an avatar that can enter into a dialogue with the user. The best recent example of this is Her (Spike Jonze, 2013), in which a digital “personal assistant” enters into a love affair with her human “user.” As an invisible intelligence represented by the voice of Scarlett Johansson, she wants to learn what human sexuality is all about, and rents a human female avatar cum sex-counsellor to satisfy her curiosity. An equally powerful biocybernetic avatar is the heroine of Ex Machina (Alex Garland, 2014), a film about a high-performance robot who has mastered the art of seduction, betrayal, and abandonment. Does anyone remember that the word “robot” comes from Karel Čapek’s 1920 play R.U.R. (Rossum’s Universal Robots), and that the first exemplar of the concept was a female character?

[3] Email correspondence with the author, July 31, 2019.

[4] On the resemblance of a Deep Learning Network to the nervous system of an insect, see https://video.search.yahoo.com/yhs/search?fr=yhs-itm-001&hsimp=yhs-001&hspart=itm&p=parallel+processing#id=6&vid=21de3e96622c07fc2552ed5a5d393589&action=view

[5] See Katherine Hayles. One notable practical application of

[6] Gilles Deleuze and Félix Guattari, A Thousand Plateaus. See also Hannah Higgins on the forest as a fractal form that provides a living interface between earth and sky (especially sunlight). Similarly, the brain is like a planet in which all the higher intelligence functions are located on the surface as a kind of “stuffing” (see also Malabou).

[7] Eduardo Kohn, How Forests Think: Toward an Anthropology Beyond the Human (University of California Press, 2013).

[8] Even more curious is his failure to cite, much less discuss, Colin Turnbull’s classic ethnography of the Congolese Pygmies, The Forest People (NY: Simon & Schuster, 1962), which documents their belief that the forest is a deity, and also “Mother and Father, Lover and Friend.” Perhaps this is because

[9] See Anna Tsing, The Mushroom at the End of the World about the role of fungi in repairing forests after fire or destructive logging.

[10] In his notes on “A Forest,” de Esteban tells us that the voice-over is “a re-scripted monologue by the managing director of the leading Venture Capital firm investing in Artificial Intelligence.”

[11] Heidegger Dictionary, 238.

[12] Correspondence with the author, November 5, 2019.

[13] See Niklas Luhmann, “Medium and Form,” in Art as a Social System, trans. Eva M. Knodt (Stanford University Press, 2000), and my critique of Luhmann’s system/environment distinction in “Addressing Media,” in What Do Pictures Want? (University of Chicago Press, 2005).

[14] Malabou, Morphing Intelligence: From IQ Measurement to Artificial Brains (Columbia University Press, 2019), 80.

[15] https://en.wikipedia.org/wiki/Cambridge_Analytica

[16] Malabou, Morphing Intelligence, 7.

[17] See my study of this complex cultural icon, The Last Dinosaur Book (University of Chicago Press, 1998).